How We Evolved Our Tech Interview Process at PagerDuty

In a tech interview, the relationship between the candidate and the company is a two-way street: applicants seek exciting companies in the same way organizations search for strong candidates. At PagerDuty, we recently identified a gap in our interview process. It wasn’t showing candidates who we are as a company, and it didn’t give candidates the opportunity to show us their best selves. So we set out to fill that gap.

In this post, I’ll talk about the legacy interview process we started from and how we transformed it to set up both candidates and PagerDuty for success. I hope what we learned helps you improve your own interview process as well.

The Background

Consider the following questions:

Have you tackled similar problems before? Did these take you a considerably long time to solve? Did you find the problem interesting?

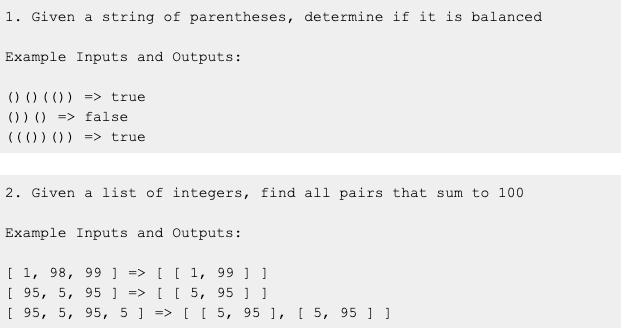

For a long time, these two questions (balanced parentheses and sum-to-100) were the bread and butter of our technical engineering interviews at PagerDuty. They served as quick technical phone screens for all of our full-time engineering candidates. They were easy to fit into the interview process, they had an obvious “good or bad” solution, and they could easily be completed within a half-hour or hour-long interview. It wasn’t long before we also started using them as the sole interview slot for candidates to our Career Accelerator Program (CAP), our internship program.

Eventually, we refreshed our technical phone screen for full-time candidates and replaced it with a new slot. CAP interviews, however, lagged behind and stayed the same: we would ask candidates to solve either balanced parentheses or sum-to-100, then wrap up the interview.
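The exact problem statements aren’t reproduced in the text, so the sketch below assumes the formulations most commonly circulated online. Balanced parentheses checks that every `(`, `[`, and `{` is closed in the correct order. Sum-to-100 places `+`, `-`, or nothing between the digits 1 through 9, in order, so that the expression equals 100. The function names `is_balanced`, `evaluate`, and `sum_to_100` are hypothetical. The point is to show why these questions have such an obvious “good or bad” answer: each reduces to a short, well-known pattern (a stack, and a brute-force search over 3^8 operator choices) that a candidate who has practiced it can reproduce almost from memory.

```python
from itertools import product

# Hypothetical helpers; the problem statements are assumed common variants,
# not PagerDuty's exact wording.


def is_balanced(s: str) -> bool:
    """Return True if every (, [ and { in s is closed in the correct order."""
    closers = {")": "(", "]": "[", "}": "{"}
    stack: list[str] = []
    for ch in s:
        if ch in "([{":
            stack.append(ch)  # remember the opener until its closer appears
        elif ch in closers:
            # A closer must match the most recent unclosed opener.
            if not stack or stack.pop() != closers[ch]:
                return False
    return not stack  # leftover openers mean the string is unbalanced


def evaluate(expr: str) -> int:
    """Evaluate a string of digits joined only by + and -."""
    total, number, sign = 0, 0, 1
    for ch in expr:
        if ch.isdigit():
            number = number * 10 + int(ch)  # concatenate adjacent digits
        else:
            total += sign * number
            number, sign = 0, (1 if ch == "+" else -1)
    return total + sign * number


def sum_to_100(target: int = 100) -> list[str]:
    """Try all 3**8 ways to put +, - or nothing between the digits 1..9."""
    digits = "123456789"
    results = []
    for ops in product(("+", "-", ""), repeat=len(digits) - 1):
        expr = digits[0] + "".join(op + d for op, d in zip(ops, digits[1:]))
        if evaluate(expr) == target:
            results.append(expr)
    return results
```

For example, `is_balanced("{[()]}")` is `True` while `is_balanced("([)]")` is `False`, and `sum_to_100()` returns 11 expressions, including `123-45-67+89`. A candidate either knows these patterns or doesn’t, which is exactly what made the questions easy to grade and hard to learn much from.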

Soon, we realized we had a problem.

The Problem

Over time, these kinds of algorithmic questions undermined our ability to evaluate candidates fairly. The biggest issue stems from the very thing that makes them attractive: they are highly standardized. Candidates often told us they had seen the problems before on coding challenge websites like HackerRank or LeetCode, or in an introductory algorithms course at college or university. This flaw became clearer with every interview we conducted.

After every interview, we hold a sync-up meeting to evaluate each candidate in three categories: culture, code, and productivity. Conversations like the following were common:

Interviewer 1: So what are your thoughts on the candidate?

Interviewer 2: I thought they did pretty well. Fairly standard answers to the soft questions we asked, so no red flags for me in terms of culture fit. On the technical side, they got to the solution quickly and smoothly. I’d say that puts them pretty high on the productivity scale.

Interviewer 1: I agree. They even had an optimal solution. Actually, I wonder if they have seen this problem before.

Interviewer 2: Well, when we asked, they said this was the first time they had seen this specific problem. But do you think they’ve at least seen a _similar variation_ of it before?

Interviewer 1: I’m not entirely sure. But I’d like to give them the benefit of the doubt. Now... how would we rate their code?

Interviewer 2: I guess it would be a pass? The solution was pretty straightforward after all. It went without a hitch and there wasn’t too much wrestling with the code.

In a slot like this, culture, code, and productivity turned out to be very weak signals. Because of this evaluation gap, we ended up with an extremely high pass rate. Over time, scores carried less and less meaning, which made the jobs of our interviewers and recruiters much harder.

The Requirement

After years of living with these problems, we decided to replace the slot entirely and started sketching out what a new one should look like.

As interviewers, we found it very difficult to distinguish candidates who had genuinely solved the problem on their own from those who had prior practice with one-dimensional, purely algorithmic questions. That made scoring ineffective and largely moot, so it was the obvious issue to address first.

Around this time, we had begun transforming our internship program into the Career Accelerator Program as it is known today. That meant a heavier focus on recruiting from all backgrounds, regardless of academic experience. This was another nail in the coffin for the balanced parentheses and sum-to-100 questions, because they gave a significant advantage to candidates who had already encountered this type of question in a typical computer science or engineering program. We needed a more thoughtful slot that gave candidates of every background a level playing field, including people switching careers, people without a traditional post-secondary education, and people returning to the workforce after an extended leave.

The Solution

To meet these requirements, we needed a slot that was flexible and extensible. Here is what we came up with.

Candidates selected for an interview receive two take-home tasks to complete before the interview itself, which takes place over Zoom.

For the first task, candidates provide written responses to a few short questions so we can get to know them better and sort out some of the administrative logistics of the hiring process. For example, we ask candidates to describe a past project they are proud of. These responses give us a high-level gauge of culture fit.

What about productivity and code? For the second task, we ask candidates to build a small, lightweight minimum viable product for a food application. The app must support three simple features that we specify, and the whole task amounts to about half an hour of work. Candidates have complete freedom over the technologies they use, the app’s architecture, and the user experience.

On interview day, the candidate and interviewer work together to extend the food application with new features that we designed as part of the slot. This shows us how well candidates work through feature requirements and whether they can explain the assumptions and decisions they make.

The Good and the Bad

This new interview format addresses many of the requirements we laid out and brings some additional benefits as well.

Because the interview is open-ended, the evaluation is much more multifaceted. Rather than judging only whether a candidate reached the right solution, we can now weigh other factors. In fact, there is no longer a single “right” solution. How the candidate structures their codebase, how they adapt existing code to new requirements, and how they use the tools they’re familiar with all became important inputs to our evaluation. The format also lets us lend a helping hand or offer suggestions more naturally without giving away the answer, which creates a great opportunity for pairing and for gauging the candidate’s coachability and how they receive feedback.

The new slot also more accurately reflects the work a product developer typically does day-to-day at PagerDuty. Rather than testing whether candidates can think in the particular way that algorithmic questions demand, we test whether the way they think and approach problems would help them succeed at their day-to-day tasks. This is vital for assessing candidates from all walks of life more accurately and fairly, whether or not they have a technical academic background.

However, these advantages came at a cost. As we trained new interviewers, ran mock interviews, and tested the new process in the wild, we realized we needed more structure than we had initially thought. Every interview differed in some way depending on what the candidate brought to the session, so it was hard for interviewers to know where to start when evaluating candidates afterward, and to make sure we applied the same criteria across interviewers and sessions. To address this, we created a rubric, similar in structure to the ones you may remember from grade school. It lists typical areas to look for on the Y-axis and levels of performance on the X-axis, with specific examples for each level and criterion. The rubric does not prescribe an evaluation; it serves as a guide for the interviewer.

An example of one of the rubrics we use.

We also found that a fair number of test candidates had never encountered this interview format at other companies, so their expectations did not always match ours. Although the take-home exercise asks for only a very simple skeleton application, some candidates arrived with many extra features and a lot of gold-plating. Candidates who brought more than we asked for often ran into hurdles and got bogged down by the extra pieces when it came time to extend their application. It took several rounds of rewording the initial requirements before they put enough emphasis on keeping things simple.

These tradeoffs turned out to be a small price to pay for the results we saw.

The Results

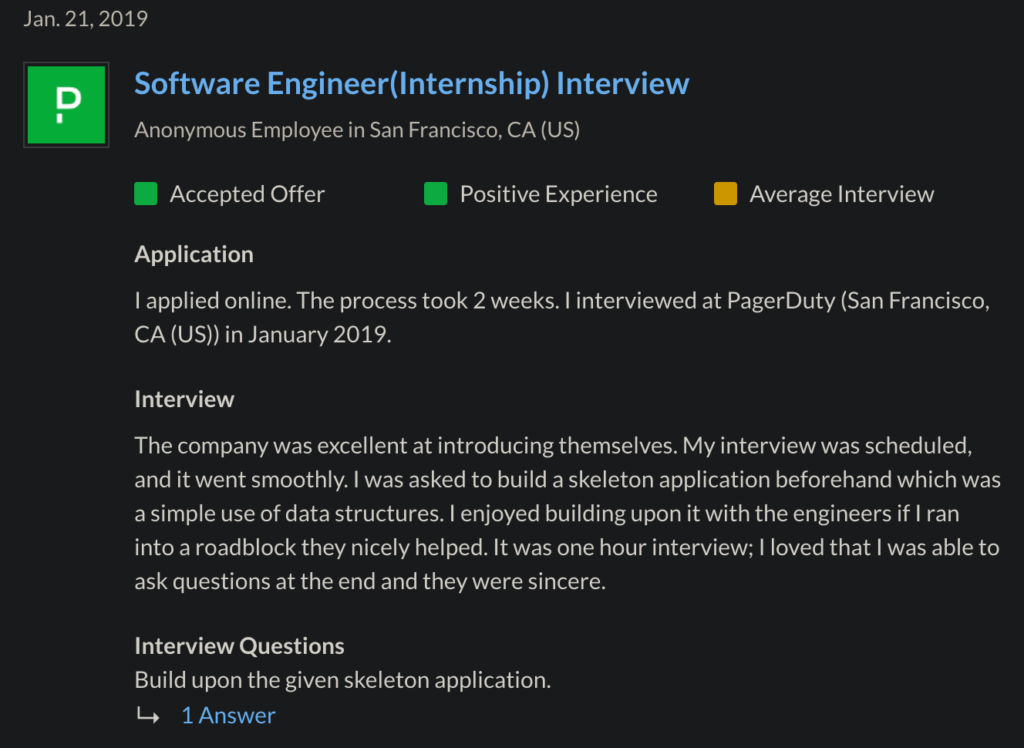

Based on conversations with interns in the past, we’ve heard repeatedly that our old interview slot was too easy and not indicative of the type of work we do here at PagerDuty. After we had started testing the new interview, it wasn’t long before we got our first response from a candidate on Glassdoor.

Our first Glassdoor feedback about the new interview slot.

We received this post while we were still running the new slot with our very first small batch of candidates. This feedback gave us the confidence to train more interviewers and migrate more of our scheduled interviews to the new process.

Fast forward to April 2019, and we had fully deprecated the old interview. By December 2019, all of the interns hired through the new interview slot were wrapping up their terms at PagerDuty. Just before their contracts ended, we gathered feedback from them about their interview experience. Here’s a sample of what we heard:

> I found [building a food application] much more enjoyable than standard algorithm problems. With the new slot, I was able to demonstrate my skills as a software engineer more effectively. With algorithmic problems such as balanced parenthesis/sum-to-100 I think they’re decent at showing problem-solving skills but they have limited overlap to what software engineering is actually about.

> I found the process to be much less stressful than typical interviews involving algorithmic problems. Since I was able to whip up a skeleton beforehand, I felt more comfortable during the interview.

> I found it a lot of fun and liked that the concept was to test our practical programming skills as opposed to interview technical question skills … I remember thinking that the interview format was really refreshing at the time since it was the only interview I did in this format!

These responses weren’t outliers. We received plenty of other positive feedback, whether during the interview itself, after the fact, or from candidates who did not end up joining PagerDuty. Overall, the outlook was good.

The new slot set out to address many of the shortcomings of the old one, and based on what we’ve heard, it’s doing its job. Stock algorithmic questions no longer cut it for hiring the best candidates, and in today’s landscape they have become boring. Just as we take in feedback and continuously refine the processes behind our day-to-day work, we should apply the same kind of reflection to how we hire and interview. This is something I would personally like to see more of across our industry.

Let’s evolve how we interview. Allowing creative freedom in our interviews gives candidates a sense of being empowered: empowered to think outside the box, empowered to take their own approach, and empowered to make the right decisions.

Interested in seeing the interview process yourself? Check out our Career Accelerator Program and submit an application!

Credit to Ian Minoso, who was instrumental throughout the entire interview revamp.